Artificial intelligence (AI) has changed how people interact online, and that includes how scammers target their victims. AI-powered scams are increasingly sophisticated and hard to detect, and they pose significant financial and security risks. This guide explains the growing threat of AI scams and offers practical strategies to protect yourself.

The Alarming Rise of AI Scams: Understanding the Threat Landscape

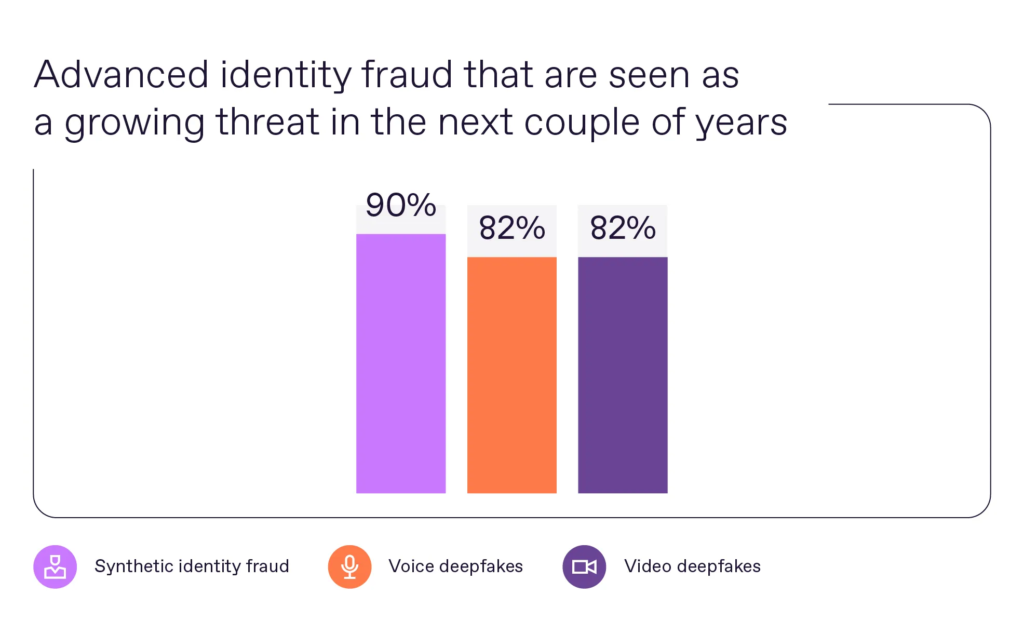

Recent statistics paint a troubling picture of AI’s role in digital fraud:

- AI-powered scams are expected to cause losses exceeding $10 trillion worldwide by 2025 (University of Wisconsin-Madison)

- According to Deloitte’s projections, generative AI could enable fraud losses to reach $40 billion in the United States alone by 2027, up from $12.3 billion in 2023, a compound annual growth rate of 32% (Deloitte Insights)

- A 2024 poll revealed that 25.9% of executives reported their organizations had experienced one or more deepfake incidents (Incode)

- 87% of Americans are worried about AI assisting scammers, with 55% reporting their concern is growing (Upwind)

- The use of generative AI tactics like deepfakes and deepaudio increased by 118% in business-related fraud (Banking Journal)
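The growth rate in the Deloitte projection can be checked from its two endpoints. The sketch below assumes a four-year span (2023 to 2027) and the standard compound annual growth rate formula; the function name `cagr` is illustrative and does not come from the source.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints quoted in the Deloitte projection, in billions of US dollars.
rate = cagr(start_value=12.3, end_value=40.0, years=4)
print(f"Implied CAGR 2023-2027: {rate:.1%}")
```

Under these assumptions the endpoints imply roughly 34% per year, a little above the quoted 32%. The published rate may rest on unrounded figures or a different base period.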

Common Types of AI-Powered Scams

1. Voice Cloning Scams

Voice cloning technology allows scammers to create a convincing replica of a person’s voice from only a short audio sample. These replicas are often used in emergency scams where the fraudster impersonates a family member in distress.

How it works: Scammers collect voice samples from social media videos, public speeches, or phone conversations. Using AI, they generate a convincing replica to call victims, typically claiming an emergency situation requiring immediate financial assistance.

Real impact: Voice cloning scams have become increasingly convincing, with victims reporting they genuinely believed they were speaking to their loved ones. The emotional manipulation combined with the authentic-sounding voice creates a powerful deception mechanism.

2. Deepfake Video Scams

Deepfake technology creates realistic but fabricated videos showing people saying or doing things they never actually did. These are increasingly used in sophisticated fraud schemes.

How it works: Scammers create convincing videos of executives, celebrities, or trusted figures that appear to endorse investment schemes, request financial transfers, or share misinformation. In corporate settings, this might involve fake video meetings with “executives” requesting urgent wire transfers.

Real impact: In early 2024, a finance employee at a multinational company was tricked into transferring $25 million after a video conference call with what appeared to be the company’s chief financial officer and other colleagues, all of whom were AI deepfakes (Reuters).

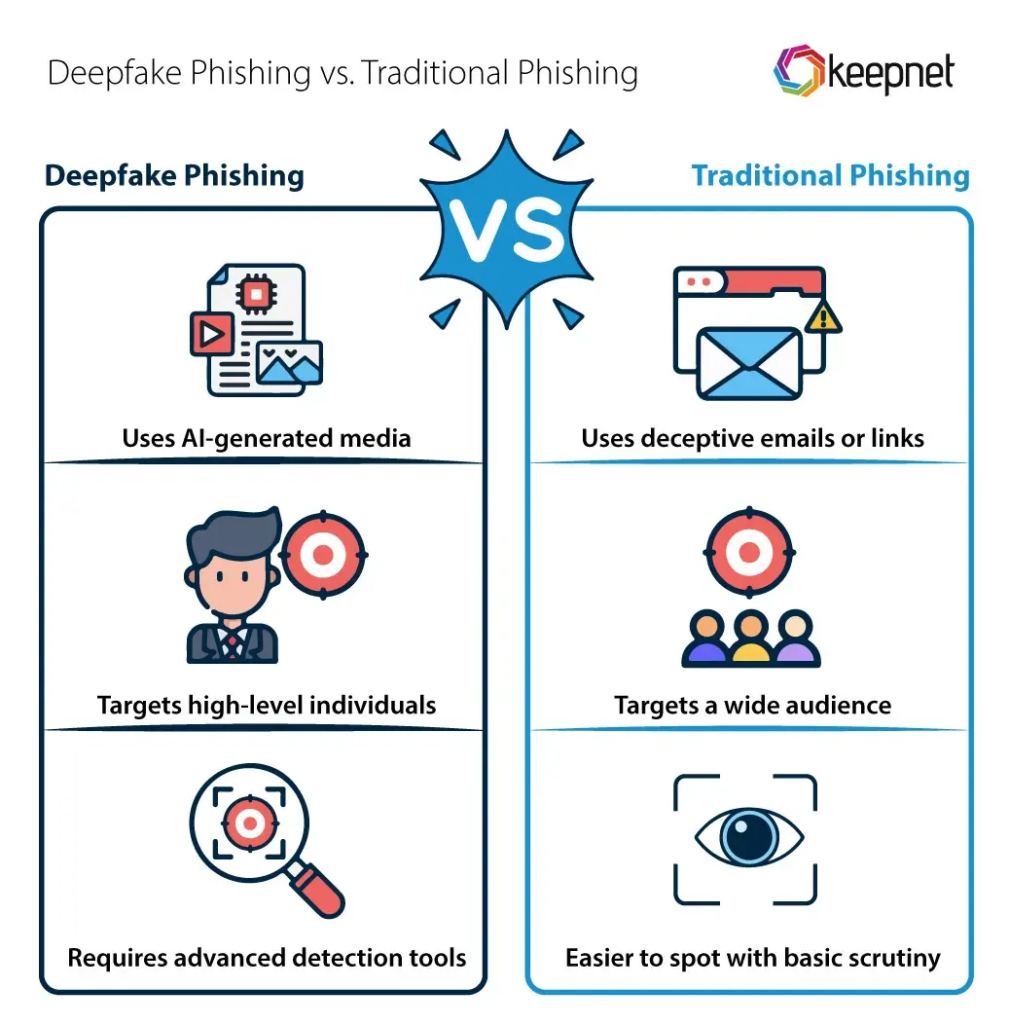

3. AI-Enhanced Phishing

Traditional phishing emails have evolved with AI assistance, becoming more personalized, grammatically correct, and convincing.

How it works: AI analyzes vast amounts of personal data to craft tailored phishing messages that reference real events, use appropriate language patterns, and exploit known interests of targets. The messages appear more authentic than traditional phishing attempts.

Real impact: AI-generated phishing campaigns have seen significantly higher success rates than traditional attacks, with some reports citing click-through rates 4-5 times higher than manually written attempts (Harvard Business Review).
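Because AI-written phishing text can be fluent and personalized, wording alone is a weak signal, and message metadata is worth checking too. The following is a minimal sketch of one such check, assuming you have the raw email text: it flags messages whose Reply-To address uses a different domain than the From address. The function name `reply_to_mismatch` and the sample addresses are hypothetical, and a mismatch is only a hint, not proof of fraud.

```python
from email import message_from_string
from email.utils import parseaddr


def reply_to_mismatch(raw_email: str) -> bool:
    """Return True when Reply-To points to a different domain than From."""
    msg = message_from_string(raw_email)
    _, from_addr = parseaddr(msg.get("From", ""))
    _, reply_addr = parseaddr(msg.get("Reply-To", ""))
    if not from_addr or not reply_addr:
        return False  # one header is missing, so there is nothing to compare
    from_domain = from_addr.rsplit("@", 1)[-1].lower()
    reply_domain = reply_addr.rsplit("@", 1)[-1].lower()
    return from_domain != reply_domain


# Hypothetical message: replies would go to a different domain than the sender's.
sample = (
    "From: Payroll Team <payroll@example.com>\n"
    "Reply-To: payroll@example-support.net\n"
    "Subject: Action required\n"
    "\n"
    "Please confirm your bank details today.\n"
)
print(reply_to_mismatch(sample))
```

Some legitimate mailing services also set a different Reply-To domain, so a result of `True` is a reason to verify the sender through another channel rather than a verdict.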

4. Fake Online Profiles and Romance Scams

AI generates convincing fake personas for romance scams and friendship fraud.

How it works: Scammers use AI to create realistic profile pictures of non-existent people, generate believable backstories, and even maintain consistent communication patterns. These fake profiles engage targets in emotional relationships before eventually requesting money.

5. AI-Generated Fake Websites and Shopping Scams

AI can now generate entire fake websites that closely mimic legitimate businesses.

How it works: Scammers use AI to clone existing websites with subtle URL changes. These sites can generate realistic product listings, fake reviews, and customer service chatbots programmed to encourage purchases or collect payment information.
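The "subtle URL changes" described above can be caught with a simple similarity check. This is a sketch under stated assumptions, not a complete defense: `TRUSTED_DOMAINS` is a hypothetical allowlist you would maintain yourself, the 0.85 threshold is an arbitrary illustrative value, and the comparison uses Python's standard `difflib.SequenceMatcher`.

```python
from difflib import SequenceMatcher
from typing import Optional
from urllib.parse import urlparse

# Hypothetical allowlist of domains the user actually does business with.
TRUSTED_DOMAINS = {"example-bank.com", "example-shop.com"}


def lookalike_of(url: str, threshold: float = 0.85) -> Optional[str]:
    """Return the trusted domain that the URL's host imitates, or None."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host in TRUSTED_DOMAINS:
        return None  # exact match, so this is the real site's domain
    for trusted in sorted(TRUSTED_DOMAINS):
        # A high similarity ratio without an exact match suggests a near-copy.
        if SequenceMatcher(None, host, trusted).ratio() >= threshold:
            return trusted
    return None


print(lookalike_of("https://examp1e-bank.com/login"))
print(lookalike_of("https://www.example-bank.com/login"))
```

The first call reports `example-bank.com` as the imitated domain because the host swaps one letter for a digit; the second returns `None` because the host matches the allowlist exactly. A check like this misses lookalikes that rely on unrelated names or look-alike Unicode characters.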

How to Detect AI Scams

1. Recognize the Warning Signs

Be vigilant for these common red flags that may indicate an AI scam:

- Urgency and pressure: Scammers create a false sense of urgency to prevent critical thinking

- Unusual requests: Be suspicious if contacts suddenly ask for money, gift cards, or sensitive information

- Communication anomalies: Watch for subtle inconsistencies in speech patterns, facial movements in videos, or writing style

- New or unexpected contacts: Be wary of unsolicited messages, even if they appear to come from known entities
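Some of these red flags can be expressed as a rough text screen. The sketch below is a keyword heuristic only, with a deliberately small and hypothetical pattern list (`RED_FLAGS`); it illustrates the idea of checking for urgency, payment requests, and requests for sensitive information, and it is no substitute for verifying the sender.

```python
import re

# Illustrative patterns only; a real filter would need a much broader list.
RED_FLAGS = {
    "urgency": re.compile(r"\b(urgent|immediately|right now|act now)\b", re.I),
    "payment": re.compile(r"\b(gift cards?|wire transfer|bitcoin|crypto)\b", re.I),
    "sensitive_info": re.compile(
        r"\b(password|verification code|social security number)\b", re.I
    ),
}


def red_flags(message: str) -> list[str]:
    """Return the names of the red-flag categories found in the message."""
    return [name for name, pattern in RED_FLAGS.items() if pattern.search(message)]


text = "Grandma, it's urgent. I need gift cards right now."
print(red_flags(text))
```

For the sample message this reports the `urgency` and `payment` categories. A well-written scam can avoid every listed keyword, so an empty result does not mean a message is safe.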

2. Verify Through Alternative Channels

When you receive suspicious communications:

- Call the person directly on a known phone number (not one provided in the suspicious message)

- Use video calls to ask contextual questions that only the real person could answer, keeping in mind that video alone can also be faked

- Contact organizations through official channels listed on their verified websites

- Establish verification procedures with family members, such as code words or specific questions

3. Check for Technical Inconsistencies

In AI-generated content, look for:

- Visual anomalies: Unnatural lighting, irregular shadows, blurry features, or strange artifacts in images and videos

- Audio issues: Robotic intonation, unnatural pauses, background noise inconsistencies, or audio that doesn’t match lip movements

- Website irregularities: Misspelled URLs, missing HTTPS, grammatical errors, or limited functionality (note that HTTPS alone does not prove a site is legitimate)

- Inconsistent details: Information that contradicts known facts or includes outdated references

Practical Protection Strategies

1. Strengthen Your Digital Security Foundation

Start with these essential security practices:

- Use strong, unique passwords for each account and consider a password manager

- Enable multi-factor authentication (MFA) wherever available

- Keep all devices, software, and apps updated with the latest security patches

- Install reputable antivirus and anti-malware protection
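A password manager normally generates passwords for you, but the idea behind a "strong, unique" password can be sketched in a few lines. This example assumes Python's standard `secrets` module as the random source; the 20-character default and the 12-character minimum are illustrative choices, not recommendations from the source.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Generate a random password containing every character class."""
    if length < 12:  # assumed minimum, chosen for illustration
        raise ValueError("use at least 12 characters")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Retry until lowercase, uppercase, digit, and symbol are all present.
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


print(generate_password())
```

Using `secrets` rather than `random` matters here because `secrets` draws from the operating system's cryptographically secure source. Generate a separate password for each account so that one breach does not expose the others.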

2. Control Your Digital Footprint

Limit what AI scammers can learn about you:

- Regularly review privacy settings on social media accounts

- Consider making accounts private or limiting what personal information is publicly visible

- Be cautious about sharing family details, travel plans, and daily routines

- Regularly search for your own information online and request removal where possible

3. Implement Verification Protocols

Create systems to confirm identities:

- Establish secret code words with family members to verify identity during unexpected requests

- Ask verification questions that only the real person would know the answer to

- For businesses, implement formal verification procedures for financial transfers or data access

- Wait for in-person confirmation for unusual or high-value requests
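Families typically exchange a code word verbally, but an organization that records a verification phrase should avoid storing it in readable form. The following is a minimal sketch of one way to do that, assuming a salted PBKDF2 hash and a constant-time comparison from Python's standard library; the function names and the iteration count are illustrative assumptions.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # assumed work factor, chosen for illustration


def _normalize(code_word: str) -> bytes:
    # Ignore case and extra spacing so spoken phrases compare reliably.
    return " ".join(code_word.lower().split()).encode("utf-8")


def enroll_code_word(code_word: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) to store instead of the code word itself."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", _normalize(code_word), salt, ITERATIONS)
    return salt, digest


def verify_code_word(attempt: str, salt: bytes, digest: bytes) -> bool:
    """Check an attempt against the stored hash in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", _normalize(attempt), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)


salt, digest = enroll_code_word("Purple Giraffe 1987")
print(verify_code_word("purple giraffe 1987", salt, digest))
print(verify_code_word("blue giraffe 1987", salt, digest))
```

The first check succeeds because normalization ignores capitalization, and the second fails. A code word only helps if it has never been shared online or in a message a scammer could read.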

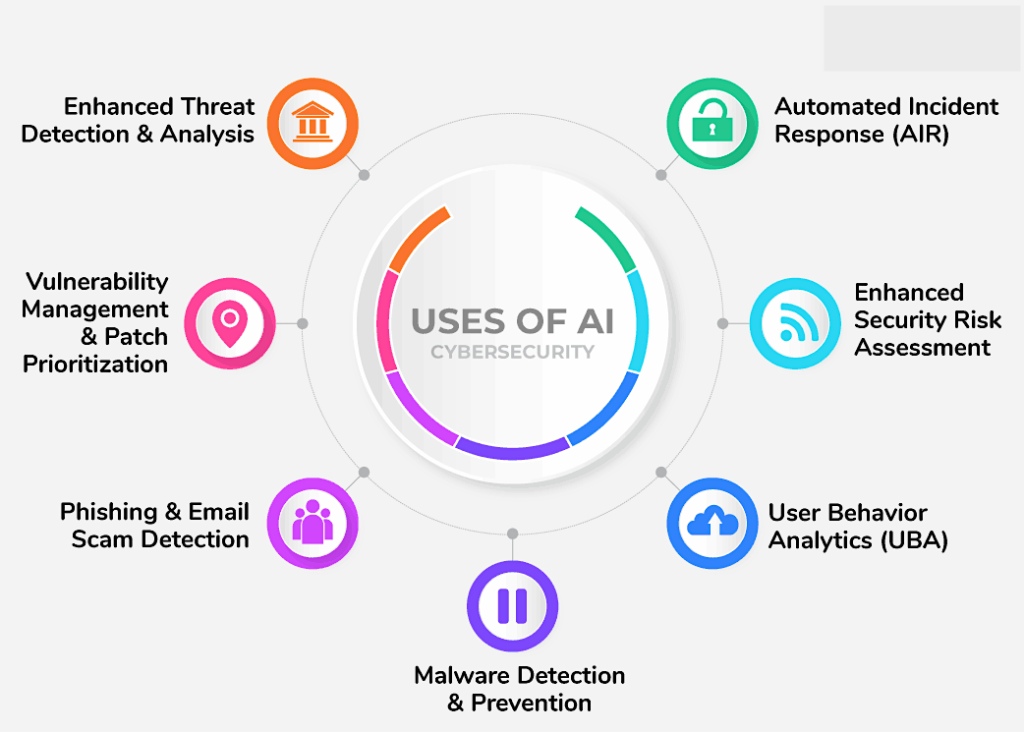

4. Utilize AI Detection Tools

Fight AI with AI:

- Use AI-powered fraud detection services that can identify synthetic content

- Try deepfake detection tools that analyze videos for manipulation markers

- Consider voice authentication systems for sensitive transactions, paired with a second factor, since a cloned voice may defeat a voice-only check

- Install browser extensions that help identify suspicious websites

5. Stay Informed and Educated

Knowledge is your best defense:

- Follow cybersecurity news sources to stay updated on emerging AI scam techniques

- Participate in security awareness training if offered by your employer

- Share information about new scams with friends and family, especially vulnerable populations

- Practice healthy skepticism about unexpected communications

Special Considerations for Vulnerable Groups

Protecting Older Adults

Seniors are often targeted by AI scams. To help protect older family members:

- Establish regular check-in routines for financial decisions

- Create simple verification systems they can easily remember

- Educate them about common scam techniques in simple, non-technical terms

- Consider monitoring services for unusual financial activity
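Monitoring for unusual financial activity often comes down to comparing a new transaction with past behavior. The sketch below is a deliberately simple illustration using a z-score over past amounts; the function name `is_unusual`, the threshold of 3 standard deviations, and the minimum of five past transactions are assumptions, and commercial monitoring services use far richer signals.

```python
from statistics import mean, stdev


def is_unusual(amount: float, history: list[float], z_threshold: float = 3.0) -> bool:
    """Flag an amount that is far from the account's typical transactions."""
    if len(history) < 5:
        return False  # too little history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return amount != mu  # every past amount was identical
    return abs(amount - mu) / sigma > z_threshold


# Hypothetical past withdrawals for one account.
past_withdrawals = [40.0, 55.0, 60.0, 45.0, 50.0, 65.0]
print(is_unusual(2500.0, past_withdrawals))
print(is_unusual(58.0, past_withdrawals))
```

The 2,500 withdrawal is flagged because it sits far outside the usual range of 40 to 65, while the 58 withdrawal is not. A flag should trigger a check-in call, not an automatic block.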

Safeguarding Businesses

Companies face unique AI scam risks:

- Implement strict verification protocols for financial transactions

- Train employees to recognize social engineering and AI-powered impersonation

- Create clear reporting channels for suspicious communications

- Develop incident response plans specifically for AI-related fraud attempts
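A strict verification protocol for financial transactions can be written down as an explicit rule rather than left to judgment under pressure. This sketch assumes a hypothetical policy: every transfer needs a callback to a known phone number plus one approver other than the requester, and transfers at or above an assumed threshold need two. The class, field names, and threshold are all illustrative.

```python
from dataclasses import dataclass, field

HIGH_VALUE_THRESHOLD = 10_000.0  # assumed policy value, for illustration only


@dataclass
class TransferRequest:
    requester: str
    amount: float
    callback_verified: bool = False  # confirmed by calling a known number
    approvers: set[str] = field(default_factory=set)


def may_release(request: TransferRequest) -> bool:
    """Apply the policy: callback first, then enough independent approvers."""
    if not request.callback_verified:
        return False
    # The requester cannot count as an approver of their own request.
    independent = request.approvers - {request.requester}
    required = 2 if request.amount >= HIGH_VALUE_THRESHOLD else 1
    return len(independent) >= required


urgent = TransferRequest(requester="cfo", amount=250_000.0, approvers={"cfo"})
print(may_release(urgent))

urgent.callback_verified = True
urgent.approvers.update({"controller", "treasurer"})
print(may_release(urgent))
```

The first check fails because an "urgent" request approved only by the apparent requester, with no callback, is exactly the pattern a deepfake video call produces. The second passes once the callback and two independent approvals are recorded.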

What to Do If You’ve Been Scammed

If you believe you’ve fallen victim to an AI scam:

- Act quickly: Contact your bank or financial institution immediately to stop payments or transfers

- Document everything: Save all communications, screenshots, and evidence

- Report the incident: File reports with:

  - Local law enforcement

  - The FBI’s Internet Crime Complaint Center (IC3)

  - The Federal Trade Commission (FTC)

  - Your state’s attorney general’s office

- Alert credit agencies: Consider placing fraud alerts or credit freezes

- Update security: Change passwords for all accounts, especially financial ones

- Seek support: Connect with identity theft resources and support groups

The Future of AI Scams and Protection

As AI technology continues to evolve, both scams and protection measures will become more sophisticated. Staying ahead requires:

- Continuous education about emerging threats

- Investment in AI-powered security tools

- Development of stronger verification systems

- Collaboration between technology companies, security experts, and consumers

- Potential regulation of AI tools to prevent malicious use

Conclusion

The rise of AI-powered scams represents a significant evolution in the threat landscape, but with awareness, vigilance, and practical protection strategies, you can significantly reduce your risk. By understanding how these scams work, recognizing the warning signs, and implementing robust security practices, you can navigate the digital world more safely.

Remember, legitimate organizations rarely demand immediate action, and you can almost always take the time to verify suspicious communications through official channels. When in doubt, pause, verify, and protect yourself from becoming another AI scam statistic.
